Who's Going to Succeed With AI?

The same companies that have been succeeding with everything else. Here's how.

As AI changes the world, it's going to change the business world.

The pattern from history is clear: general-purpose technologies (those rare ones with impact broad and deep enough to accelerate the normal march of economic progress) don't maintain the status quo. They disrupt it. They create new winners and losers, enable the rise of new superstars, and cause some (many? most?) established giants to stumble and fall. One well-documented example: the American manufacturers at the top of their industries when factories started electrifying early in the 20th century were usually not the ones on top once electrification had wrought its changes (conveyor belts, assembly lines, overhead cranes, individually motorized machines, and so on).

Note that very few of these disrupted manufacturers were in the steam engine business. In other words, they weren't making gear that became obsolete because of the new technology. We expect such companies to go out of business, to the point that the phenomenon has become a cliché: business school students are warned not to be in the “buggy whip” business when the automobile comes along.

Electrification's impact was felt far beyond the power equipment industry; the blast zone of the new technology extended throughout the manufacturing sector. Similarly, AI's disruptive impact won't be confined to the high-tech industry. It won't just change competition among software and hardware makers. It will do that (it's already happening!), but it will also change competition, industry structure, and the list of leading companies in just about every industry.[1]

Ensuring that Disruption Happens to the Other Guy

Some investors are betting that disruption will be brought to many historically stable sectors via AI-centric roll-ups. As the Financial Times puts it, “Top venture capital firms are borrowing a strategy from the private equity playbook, pumping money into tech start-ups so they can ‘roll up’ rivals to build a sector-dominating conglomerate.” Law firms, wealth and property managers, and accountancies are already being rolled up and stitched together via AI, and I'm confident there's much more to come here.

It remains to be seen whether all of this rolling up will work. But it doesn't remain to be seen whether established industries are getting disrupted by tech-savvy newcomers. In The Geek Way, and frequently here, I’ve written about companies like Netflix, Anduril, Stripe, SpaceX, and Tesla, which are doing to the incumbents in their industries roughly what Alexander the Great’s army did to all the empires it faced.

I've also written a lot about what these geeks are doing differently. Here I want to distill that down and apply it specifically to AI, which in the eyes of the geeks is a gigantic big deal. But at the same time it’s also no big deal to them, because they’re confident that they can harness its power and be the disruptors instead of the disruptees.

There have been plenty of other big-deal technologies already in the 21st century: the cloud, smartphones, Web 2.0, and machine learning, to name a few. Geek companies like the ones listed above have done a fine job overall at incorporating these novel tools. To say the same thing a different way, they've learned how not to experience new technologies as disruptive. Instead, they've learned how to make them disruptive to the other guy.

In this case, “the other guy” is the set of competitors that aren’t as good at bringing on board powerful new techs. The geek art and skill of successfully incorporating new technologies, one after the other, becomes an ever-growing competitive advantage in a world of rapid technological change. Which is the world we’re living in now.

This Is How They Do It

So how do the geeks do it? Here's my handy summary (okay, my grievous oversimplification) of how they work with powerful new technologies, using modern AI as an example. It’s an eight-step process:

(1) Communicate the Expectation, Again and Again and Again. A big, often-underestimated part of bringing in new technology is changing norms. “We develop for mobile first now, not desktop.” “We’re using general machine learning models now, and doing less feature engineering.” “We’re using Slack to collaborate, not email.”

My friend Erez Yoeli, coauthor of the great applied game theory book Hidden Games, is currently working on a book about how to create a norm: a widely expected behavior that’s maintained through community policing. He stresses the importance of repeating that the new behavior is in fact expected; that we’re doing things this way now. It’s striking how many business leaders say that they had to learn to say the same thing over and over (well past the point, I get the impression, at which they were sick of hearing themselves say it). So here are a few sample sentences about the new norm of being all-in on AI. Get ready to say them many, many times:

“We are an AI-centric company. We use it wherever appropriate to help us get our work done better and faster. We use it to improve our offerings, our customer service, our internal operations — everything. AI is going to be a major focus and area of investment for us in the coming years.”

(2) Make AI an OKR. Bosses repeat themselves a lot, and say that lots of things are important. Which ones do they really truly care about? The ones that they assign as OKRs (objectives and key results).

OKRs are the things that you’re measured on, and the things you discuss with your boss during reviews. But as venture capital legend John Doerr stresses in his book Measure What Matters, they shouldn’t be too directly tied to compensation. Doing so often leads to all kinds of juke-the-stats shenanigans. As Doerr writes, “In today’s workplace, OKRs and compensation can still be friends. They’ll never totally lose touch. But they no longer live together, and it’s healthier that way.”

I think of OKRs as short, clear lists of the norms that are truly desired by the leadership of an organization. When the CEO talks about topic X all the time but doesn’t include it as an OKR, I’ve come to believe that X is a topic that they’re expected to talk about but don’t really care about all that much. As Doerr’s title implies, after all, if it mattered they’d be measuring it.

(3) Create a Minimum Viable Plan. I’ve seen a lot of companies build their portfolio of AI efforts via a largely bottom-up approach. They let many flowers bloom via pilot projects, demo days, and so on. These efforts can build awareness and enthusiasm, but they risk dissipating the energy required to succeed with AI.

Bringing in a powerful new technology is hard work, both technically and (especially) organizationally. It's like overhauling a ship while sailing it into battle. So you've got to pick your spots carefully. What new equipment does the ship need the most? The engines? The guns? The radar? Doing anything other than the most important upgrades is a good way to find yourself unprepared for what's ahead.

Same thing with AI. Since you're going to spend effort, energy, resources, and organizational capital getting AI to work anywhere, you might as well get it to work where it can be most effective: where it can most help you speed things up, take care of customers, improve quality, or accomplish other key goals in key parts of the business.

How do you know where your AI hotspots actually are? I prefer the task-based approach pioneered by my cofounders Erik Brynjolfsson and Daniel Rock and applied to AI in a paper published in Science by Rock and a team from OpenAI. The key insight here is that AI doesn't affect entire jobs; instead, it's applied to specific tasks within those jobs. A task-based analysis of AI opportunities is therefore at the right level of detail, and it can also be abstracted upward to jobs, job families, processes, functions, divisions, and so on.

A task-based analysis yields a precise heat map of an organization’s opportunities to create value with AI. Such a heat map ensures that everyone involved in AI decisions is starting with the same information, and with a shared understanding of how the technology can best help them. This gives them a good foundation from which to set priorities and make resource allocation decisions.
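Here is a toy illustration of that upward roll-up in Python. It assumes, hypothetically, that each task has already been scored for AI exposure on a 0-to-1 scale and that we know roughly what share of a job's time each task takes. The jobs, tasks, and numbers are invented, and the scoring is a stand-in, not the rubric used in the Science paper. Rolling tasks up to jobs is just a time-weighted average; the same aggregation would work one level higher, for job families or functions.

```python
from collections import defaultdict

# Hypothetical task inventory: (job, task, share of the job's time, AI exposure 0-1).
# Every name and number here is invented for illustration.
TASKS = [
    ("claims_adjuster", "summarize claim documents", 0.40, 0.9),
    ("claims_adjuster", "on-site inspection", 0.35, 0.1),
    ("claims_adjuster", "customer calls", 0.25, 0.5),
    ("payroll_clerk", "reconcile timesheets", 0.60, 0.7),
    ("payroll_clerk", "answer employee questions", 0.40, 0.6),
]


def job_exposure(tasks):
    """Roll task-level exposure scores up to jobs as time-weighted averages."""
    weighted = defaultdict(float)
    total_share = defaultdict(float)
    for job, _task, share, score in tasks:
        weighted[job] += share * score
        total_share[job] += share
    return {job: weighted[job] / total_share[job] for job in weighted}


def heat_map(tasks):
    """Jobs sorted from most to least AI-exposed: a one-dimensional 'heat map'."""
    scores = job_exposure(tasks)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for job, score in heat_map(TASKS):
        print(f"{job:<16} {score:.2f}")
```

With these invented numbers, payroll_clerk (0.66) ranks above claims_adjuster (0.52), even though the adjuster has the single most exposed task, because time shares matter as much as scores.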

(4) Learn by Doing. I've watched many organizations, especially large ones, spend many months planning and preparing their AI efforts instead of embarking on AI efforts. There are lots of seemingly good reasons to proceed cautiously, from data leakage and privacy concerns to ethical considerations to the fear of doing something pretty dumb like deploying a customer-facing chatbot that agrees to sell cars at a steep discount. But there's one reason to dive in quickly and start doing things that trumps all of these concerns: doing things is the best way to learn what actually works. I'll go one step further: for something as new and fast-changing as AI, doing things is the only way to learn what actually works.

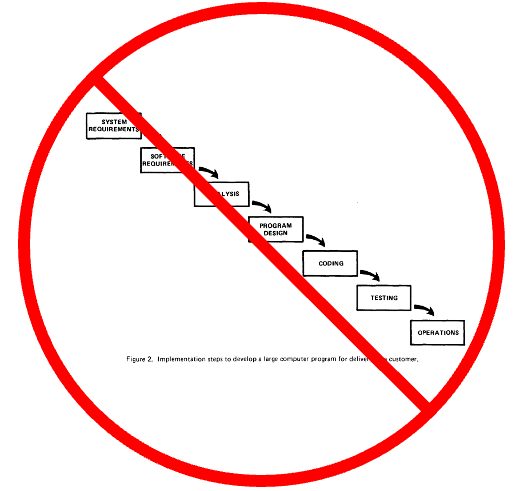

The dominant 20th-century way to manage big, complicated projects came to be called the waterfall method: "Do this, then do this, then do this, and so on." It was heavy on up-front planning and analysis, requirements documentation, and so on, and very light on experimentation, feedback, iteration, and incorporating new information as it came up. Technologist Clay Shirky’s brutal summary of waterfall is “a pledge by all parties not to learn anything while doing the actual work.”

In the 21st century the geeks have rebelled against waterfall with Agile methods, an explicitly cyclical and iterative project management approach that emphasizes constantly incorporating and responding to new information. There are many varieties of Agile by now.[2] To me, they all seem essentially similar: they're all based on the fundamental belief that to build successfully you have to learn effectively, and that the best way to learn is to do.

Now, whenever I see a large organization spending a lot of time thinking about AI, I try to nudge them to actually start doing at least some of the AI projects that came out of their planning efforts.

(5) Track Progress. These days, it's possible without too much heavy lifting to observe how AI efforts are unfolding in real time. Are people using the technology more week by week? Are their prompts getting better and showing evidence of more sophisticated use? What are the biggest power users doing differently, and what can we learn from them?
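A minimal sketch of this kind of tracking in Python, assuming a hypothetical log of AI-assistant sessions that records a user ID, a date, and the prompt's length in words. Prompt length is only a crude stand-in for sophistication, chosen here for simplicity; a real dashboard would draw on whatever usage telemetry your tools actually expose.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical usage log: (user_id, session date, prompt length in words).
# Prompt length is an assumed, crude proxy for more sophisticated use.
LOG = [
    ("ana", date(2025, 3, 3), 12),
    ("ben", date(2025, 3, 5), 8),
    ("ana", date(2025, 3, 10), 30),
    ("ben", date(2025, 3, 11), 20),
    ("cy", date(2025, 3, 12), 25),
    ("ana", date(2025, 3, 12), 28),
]


def weekly_summary(log):
    """Per ISO week: number of distinct active users and average prompt length."""
    users = defaultdict(set)
    lengths = defaultdict(list)
    for user, day, words in log:
        iso_year, iso_week, _ = day.isocalendar()
        users[(iso_year, iso_week)].add(user)
        lengths[(iso_year, iso_week)].append(words)
    return {
        week: {
            "active_users": len(users[week]),
            "avg_prompt_words": sum(lengths[week]) / len(lengths[week]),
        }
        for week in sorted(users)
    }


def power_users(log, n=3):
    """Users with the most sessions overall: candidates to learn from."""
    return Counter(user for user, _, _ in log).most_common(n)


if __name__ == "__main__":
    for week, stats in weekly_summary(LOG).items():
        print(week, stats)
    print(power_users(LOG))
```

With this invented log, active users grow from 2 to 3 week over week, average prompt length rises from 10 to 25.75 words, and "ana" surfaces as the heaviest user.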

The geeks are freaks about instrumentation (about constantly measuring the things they care about) for lots of reasons. For one, instrumentation and OKRs go hand in hand. For another (and this is so obvious that it almost doesn't need to be said), without instrumentation you're flying by the seat of your pants.

That phrase comes from the early days of aviation when pilots lacked good instruments and instead had to look out the window (which got less effective when it was cloudy or dark) and also learn to absorb important information from how their controls and seats were vibrating. “Flying by the seat of your pants” came to mean having only sparse information when you really want plentiful information.

The geeks think it's ludicrous to try to run an organization that way. As Stripe CEO Patrick Collison explained to me when I was researching The Geek Way, “I’m always pushing people, how will we measure that? How do we know whether it’s working? If you have the measurement, why is it increasing or why is it not increasing?”

(6) Understand AI’s Impact. This is an aspect of tracking progress, but it's so important that I'm calling it out separately. What I mean here is “identify the causal impact AI adoption has had on key performance measures.” In other, non-economist words, separate out the changes brought by AI from all the other things going on inside and outside the organization.

When phrased that way, the challenge becomes clear. There are always a lot of other things going on inside and outside organizations, and it's hard to separate signal from noise, correlation from causation, selection effects from treatment effects, and so on. The world is a messy and chaotic place, one in which it's hard to confidently say “this caused that” as opposed to “this was associated with that,” or “that happened after we did this.”

But we desperately want to make those causal statements because we want to know what's driving the changes we observe. It's great that productivity and customer satisfaction went up, and employee churn went down, after new AI was deployed in a call center. But was the AI responsible for all these changes, or only some of them? Furthermore, if productivity went up by, say, 30%, was the AI responsible for all of that 30%? The answers inform other important decisions: how much we should be willing to pay the vendor, how eager we should be to deploy the technology more broadly, and so on.
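Difference-in-differences is one standard way to ask how much of that 30% to credit to the AI. The sketch below assumes, hypothetically, a comparable call center that did not get the AI over the same period, and that both centers would have followed parallel trends without it. All numbers are invented; with real data you would also want standard errors and a check on the parallel-trends assumption.

```python
from statistics import mean

# Hypothetical calls resolved per agent per day, before and after the AI rollout.
# The treated center got the AI; the comparison center did not. Numbers are invented.
treated_pre = [98, 100, 102]
treated_post = [127, 130, 133]
control_pre = [99, 100, 101]
control_post = [110, 112, 114]


def diff_in_diff(t_pre, t_post, c_pre, c_post):
    """Difference-in-differences: the treated group's change minus the control group's.

    Valid only under the parallel-trends assumption: without the AI, the treated
    center would have changed by the same amount as the comparison center.
    """
    treated_change = mean(t_post) - mean(t_pre)
    control_change = mean(c_post) - mean(c_pre)
    return treated_change - control_change


raw_change = mean(treated_post) - mean(treated_pre)  # the headline +30 (i.e., +30%)
ai_effect = diff_in_diff(treated_pre, treated_post, control_pre, control_post)
share_attributable = ai_effect / raw_change

if __name__ == "__main__":
    print(f"raw change: {raw_change}, AI effect: {ai_effect}, share: {share_attributable:.0%}")
```

Here the headline gain is 30 calls (30%), but 12 of those also showed up at the center without AI, so the estimate credits the AI with 18, or 60% of the raw improvement.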

As I wrote here earlier, there's good news on this front: we can now get much better answers to these questions than was the case twenty-five years ago. One of the most important developments in 21st-century economics is a huge leap forward in our ability to do causal inference: to say confidently what caused what.

Most organizations do causal inference all the time in one domain: they A/B test proposed changes to their websites and apps to understand which changes improve things and which don't.[3] But the causal inference toolkit hasn't yet spread much more broadly than that. As a result, most organizations today are in a weird spot. They're much more rigorous and well-informed about changes to their homepage than they are about critical organizational changes like AI adoption. We now have the data and the causal inference tools we need to do much better on the latter. So we should do much better on the latter. Organizations should be clear on the causal impact of their AI efforts.
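The workhorse statistic behind many such tests is a two-proportion z-test, and nothing about it is specific to homepages. The sketch below applies it to a hypothetical AI pilot: support agents randomly assigned either to an AI assistant (B) or to business as usual (A), with success defined as a ticket resolved on first contact. The counts are invented, and production experimentation platforms typically layer more on top (sequential-testing corrections, variance reduction, and so on).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (lift, z, p_value), where lift = rate_b - rate_a.
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled success rate under the null hypothesis that both arms are the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, z, p_value


# Hypothetical pilot: 5,000 tickets per arm; A = no assistant, B = AI assistant.
lift, z, p_value = two_proportion_z_test(3500, 5000, 3650, 5000)

if __name__ == "__main__":
    print(f"lift={lift:.3f}  z={z:.2f}  p={p_value:.4f}")
```

With 70% vs. 73% success on 5,000 tickets per arm, z comes out around 3.3 and the two-sided p-value is well under 0.01. Random assignment is what makes the comparison causal; the arithmetic alone can't do that.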

(7) Do More of What’s Working. The biggest benefit from doing proper causal inference is getting hot leads on how to improve your performance. In one case, a company's initial analysis showed that coding assistance didn't reduce the time required to complete programming tasks. But once we ran the same data through our causal inference engine and included appropriate controls, we saw that the most senior engineers actually were able to use AI to shrink cycle times. Once we learned what they were doing differently, we were able to spread those changes and shorten cycle times across the board.
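The toy numbers below, all invented, show how a pooled comparison can hide an effect like that. In this made-up data, juniors use the assistant more and are slower regardless, so pooling every engineer makes AI users look slower. Comparing within seniority groups is the simplest kind of control; it assumes that, within a seniority level, who used the assistant is as good as random. Real analyses would more often use regression, matching, or similar methods, and this is not Workhelix's engine.

```python
from statistics import mean

# Hypothetical records: (seniority, used_ai, cycle_time_hours). Invented numbers.
RECORDS = (
    [("senior", True, t) for t in (19, 21)]
    + [("senior", False, t) for t in (28, 29, 30, 30, 31, 32)]
    + [("junior", True, t) for t in (40, 41, 42, 42, 43, 44)]
    + [("junior", False, t) for t in (39, 41)]
)


def diff_in_means(rows):
    """Average cycle time with AI minus without AI (negative = faster with AI)."""
    with_ai = [t for _, used, t in rows if used]
    without_ai = [t for _, used, t in rows if not used]
    return mean(with_ai) - mean(without_ai)


def effect_by_group(rows):
    """The same comparison, run separately within each seniority level."""
    groups = sorted({group for group, _, _ in rows})
    return {g: diff_in_means([r for r in rows if r[0] == g]) for g in groups}


pooled = diff_in_means(RECORDS)
by_group = effect_by_group(RECORDS)

if __name__ == "__main__":
    print("pooled:", pooled)
    print("by seniority:", by_group)
```

With these numbers the pooled comparison says AI users take 4 hours longer, while the within-group comparisons say seniors save 10 hours and juniors lose 2. Stratifying only helps with confounders you have measured; it does nothing about ones that aren't in the data.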

Getting that causal inference right, though, is non-trivial. It takes experience, judgment, and PhD-level training. Among many other things, you've got to set up your analyses correctly to do the appropriate causal identification. ChatGPT just gave me a great analogy:

Think of causal inference as the full journey from question to conclusion.

Causal identification is like the passport check at the border:

If your identification strategy doesn’t pass, the rest of the trip (inference) isn’t allowed.

In many domains, causal inference has already led to significant improvements. For example, after the causal link between early childhood exposure to lead and later-in-life violent criminality was established, lead abatement policies got enforced more strictly. Once it became clear that car seats really did protect kids, all 50 states mandated their use. On the other hand, once it became clear that cash bail didn't lower crime rates or criminal court absenteeism, several states decided to rely on it less.

As these examples indicate, there's been a large recent upswell in evidence-based policy making (where “based” means “based on solid causal inference”). I think and hope that a similar upswell is building in the business world. It's time to bring causal inference from econometrics to the enterprise.

(8) Keep Doing It. Businesses aren't going to be finished with their AI efforts any time soon, any more than they're finished with any other flavor of technological or organizational change. The work of succeeding with AI is going to be ongoing, iterative, learning-heavy work. Organizations that get good at this work (IMO, by getting good at all the steps listed above) have by far the best chance of getting to the top and staying there in the turbulent years ahead.

This Is How We Help You Do It

If you'd like some help going through the steps above, we’d love to hear from you. The “we” here is Workhelix, a startup I cofounded with Erik Brynjolfsson, James Milin, and Daniel Rock.

Our company’s tagline is "Accelerate your AI transformation." We take a lot of relevant research in data science, machine learning, economics, and cultural evolution (including our own), embed it into code, and make it available to our customers via a tech-enabled services approach. This means that we deploy both engineers and strategists to our customers so that they can get started with the above process as quickly as possible. These forward-deployed folks work with our team of data scientists, engineers, and economists (geeks, all of them) back at HQ.

Workhelix's goal is to combine the best practices pioneered by modern business geeks with the most potent tools developed by the 21st century's causal inference geeks, then serve them out to customers for maximum impact and insight with minimal hassle. If that sounds interesting, let’s talk.

[1] I put “just about” in that sentence because I think there are some industries in which incumbents are protected by such a dense regulatory thicket that they won’t be meaningfully disrupted by upstarts and outsiders, at least in the short-to-medium term. I was talking with the CEO of a large insurance company a while back who told me, "Look, Andy, I'm not worried about your geeks. They can't get past all the layers of regulation. I don’t need AI to hold them off. I want to use AI to take share away from my competitors."

[2] And lots of internecine shouting.

[3] Most don’t.

Excellent essay. One comment and one question.

Comment: Safety engineers will always apply a waterfall approach, because they need to do careful scenario-based hazard analysis and mitigation. They learn instead by doing pilot studies, building prototypes, and testing them in safe settings (e.g., test ranges). Because LLM-based systems have a significant failure rate, any process that carries risk (e.g., inventory management) needs to be carefully designed and tested. An LLM is like an aircraft that randomly loses power for a few minutes out of every hour. It is possible to learn how to fly such a plane, but it requires great care. One strategy (which I saw at a recent IBM presentation) is to only take action based on code emitted by the LLM. Code can be sanity-checked using formal tools from programming languages before being executed.

Question: LLM-based systems provide new abstractions (such as "agents") and new communication mechanisms (such as agent-to-agent communication, natural language communication with customers, suppliers, etc.). Can you point to work that is studying how the modern corporation might be re-designed using these new abstraction and communication mechanisms? I wonder if a task-based approach might automate fine-grained aspects of the corporation and miss the opportunity to restructure. In the back of my head, I'm wondering what the AI equivalent is of the need to redesign manufacturing processes using electricity.

I think a lot of this makes sense but have two quibbles. 1. Measuring and analysing causes sounds great but it will be very hard to get clear answers for some time - so need to take care not to introduce false certainty. 2. No amount of corporate planning will stop people experimenting. And in some cases, those will be the things that work. So again need to be careful not to stifle innovation with central planning.